An Introduction to Transfer Learning

Take a step ahead to make a machine learn from another!

Welcome readers! I hope you are having a great day. Before we get into the technical details, I want to give you an intuition about transfer learning in the real world 🌏.

Suppose you are in X standard today. Whatever subjects you are learning in X standard relate in some way to the same subjects in X+1 standard, right? To learn multiplication ✖️, we first learned addition ➕. This application of knowledge, skills, or experience gained in one situation to another is transfer learning 😁. Let us see how this works similarly for machines 🤖. We'll cover the following points in this blog 👇:

|-- What is Transfer Learning

|-- How to use Transfer Learning

|-- Key Questions to Ask

|   |-- What to Transfer

|   |-- When to Transfer

|   |-- How to Transfer

|-- Strategies for Transfer Learning

|   |-- Inductive

|   |-- Unsupervised

|   |-- Transductive

|-- Benefits of Transfer Learning

What is Machine Learning?

Machine Learning (ML) is a branch of Computer Science and Artificial Intelligence that involves training machines to make predictions automatically by learning from experience, that is, from data.

Different algorithms are used to build a machine learning model. The model takes a cleaned dataset as input and learns from it by identifying 🕵️ patterns in the data. An ML model is selected based on the available data and the task to be accomplished ✔️. Examples of machine learning models include linear regression, k-means clustering, decision trees, and random forests.
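To make "learning patterns from data" concrete, here is a minimal sketch of simple linear regression, one of the models listed above, fitted with ordinary least squares in plain Python. The function name `fit_line` and the toy data (invented so that score = 10 * hours + 40) are illustrative assumptions, not part of any library.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be equal")
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy "experience": hours studied vs. test score (made-up numbers).
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [50.0, 60.0, 70.0, 80.0, 90.0]
slope, intercept = fit_line(hours, scores)
print(f"learned pattern: score = {slope:.1f} * hours + {intercept:.1f}")
print("prediction for 6 hours:", slope * 6 + intercept)
```

The "pattern" this model learns is just a slope and an intercept; once learned, it can predict scores for inputs it has never seen.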

📌 Applications of ML: Weather forecasting, Image classification, Language translation, Recommendation systems, etc.

What is Deep Learning?

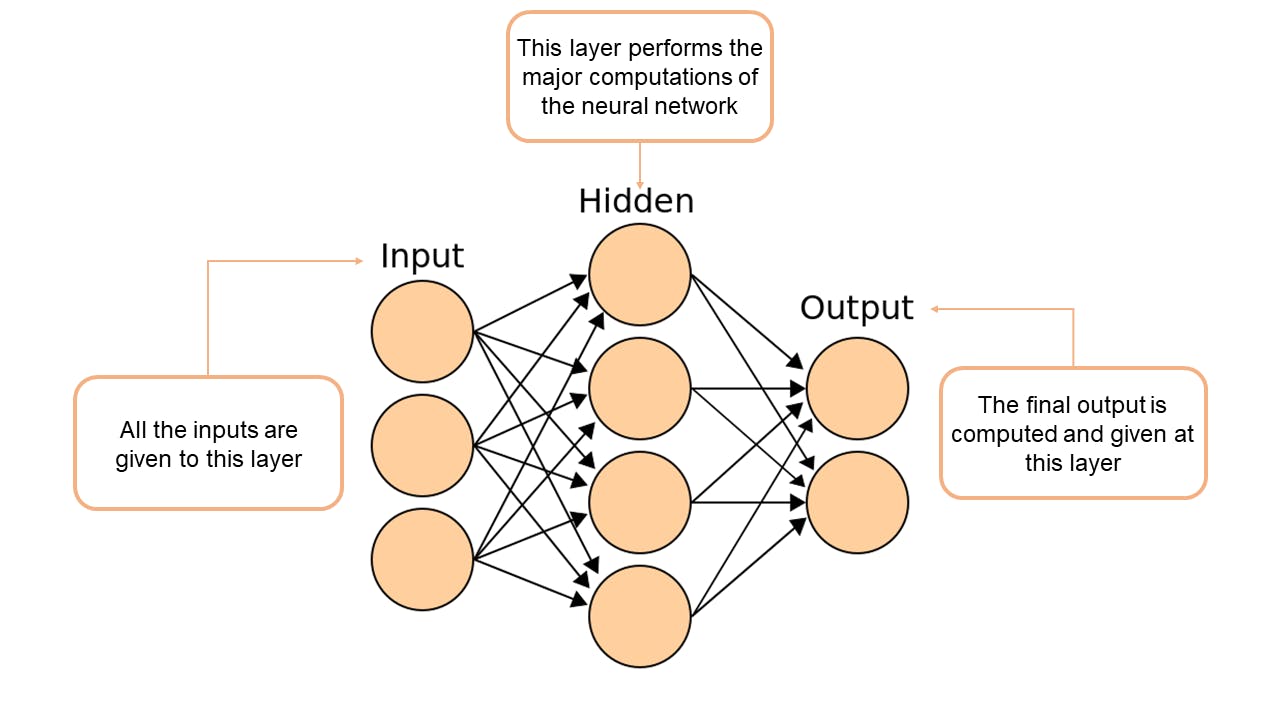

Deep Learning is a sub-domain of machine learning concerned with creating neural networks to solve complex problems.

A neural network is built from layers of interconnected neurons whose weights are learned by training algorithms; as a whole, it loosely mimics the human brain 🧠. A deep learning neural network consists of an input layer, one or more hidden layers, and an output layer. You can see the various layers and their use in the image above.
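A forward pass through the input, hidden, and output layers can be sketched in a few lines of plain Python. This is a toy illustration, not how TensorFlow implements it: the helper names (`dense`, `relu`, `sigmoid`, `forward`) are hypothetical, and the weights are hand-picked rather than learned.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values: Vector) -> Vector:
    return [max(0.0, v) for v in values]


def sigmoid(values: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-v)) for v in values]


def forward(x: Vector, w_hidden: Matrix, b_hidden: Vector, w_out: Matrix, b_out: Vector) -> Vector:
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = relu(dense(x, w_hidden, b_hidden))
    return sigmoid(dense(hidden, w_out, b_out))


# Hand-picked (not trained) weights: 2 inputs, 3 hidden neurons, 1 output neuron.
w_hidden = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
b_hidden = [0.0, 0.1, 0.0]
w_out = [[1.0, -0.5, 0.7]]
b_out = [0.2]
print(forward([1.0, 2.0], w_hidden, b_hidden, w_out, b_out))
```

Training a network means adjusting these weights (typically with backpropagation and gradient descent) so that the outputs match the expected labels.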

You can view how to create a simple neural network using TensorFlow here.

📌 Generally, deep learning is preferred over classical machine learning when we need to solve very complex problems with large amounts of data and have the high computing power this requires.

Now let’s get started with transfer learning.

What is Transfer Learning?

A widely used definition is:

Transfer learning is the improvement of learning in a new task through the transfer of knowledge from a related task that has already been learned.

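There are two terms that you must understand before proceeding further.

📝Terminologies used:

Domain: the data a model works with, described by its feature space and the probability distribution of that data (for example, photos of animals).

Task: what the model must do with that data, described by its label space and the function that maps inputs to labels (for example, naming the animal in each photo).

The domain and task a model has already learned are called the source domain and source task; the new ones we want to learn are the target domain and target task.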

Transfer learning 🔀 is a type of machine learning 💻 in which the knowledge a model has gained on one task (for example, its learned weights or features) is reused as the starting point for a model on a different but related task. Transfer learning is used to solve complex problems in Natural Language Processing (NLP) 🎤, Computer Vision 📷, Reinforcement Learning 🤖, and Deep Learning in general 📃. It reduces the time and computational power spent on training a complete model from scratch on large datasets. One can take a pre-trained model as a base and create a task-specific model from it by using transfer learning.
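As a rough illustration of taking a pre-trained model as a base, the sketch below keeps a "base model" frozen and trains only a new task-specific head on a small target dataset. Everything here is hypothetical: `PRETRAINED_BASE` is hand-picked rather than actually pre-trained, and the head is a simple logistic regression trained with plain SGD. In Keras the same idea usually means loading a network from `tf.keras.applications` with `include_top=False`, setting `base_model.trainable = False`, and adding new layers on top.

```python
import math
from typing import List, Tuple

Vector = List[float]

# HYPOTHETICAL "pre-trained" weights. In a real project these would come from a model
# trained on a large source dataset; here they are hand-picked to keep the example small.
PRETRAINED_BASE: List[Vector] = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def extract_features(x: Vector) -> Vector:
    """Frozen base model: a fixed linear layer + ReLU. Its weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_BASE]


def train_head(data: List[Vector], labels: List[int],
               epochs: int = 200, lr: float = 0.5) -> Tuple[Vector, float]:
    """Train only a new logistic-regression head on top of the frozen features (plain SGD)."""
    feats = [extract_features(x) for x in data]  # computed once because the base is frozen
    weights = [0.0] * len(feats[0])
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            logit = sum(w * fi for w, fi in zip(weights, f)) + bias
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y  # derivative of the log loss with respect to the logit
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(x: Vector, weights: Vector, bias: float) -> int:
    f = extract_features(x)
    return 1 if sum(w * fi for w, fi in zip(weights, f)) + bias > 0 else 0


# Small labeled target dataset (made up): label 1 when both inputs are "large".
target_x = [[0.0, 0.0], [0.2, 0.3], [1.0, 1.0], [0.9, 0.8]]
target_y = [0, 0, 1, 1]
head_w, head_b = train_head(target_x, target_y)
print([predict(x, head_w, head_b) for x in target_x])
```

Because the base is frozen, its features are computed once and only the small head is optimized, which is where the savings in time and compute come from.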

You may be wondering 🤔: why can't we just build a neural network from scratch? We can, but building a model from scratch every time is rarely practical. Even if we have the data, training and optimizing the model takes a long time and requires a lot of computational power. Therefore, reusing knowledge from a source task ℹ️ that is related to the target task 🎯 makes more sense in practice.

💡 This type of learning, where one model reuses the knowledge of another 🤝, can significantly reduce training time and improve the performance of the resulting model.

How to use Transfer Learning

📌 Two approaches to transfer learning are commonly used:

👉 Pre-trained model approach: Many research institutions create models for a specific task that are trained with large and challenging datasets. One can select a pre-trained model from a large number of available models specific to the task. Use this model as the starting point to induce learning in the target model and then optimize the resultant model as needed.

👉 Develop a source model approach: If we don't wish to use a pre-trained model, we can develop our own source model on a related task for which plenty of data is available. This model should learn rich features that are useful for both the source and the target task. Use this model as the starting point to induce learning in the target model and then optimize the resultant model as needed.
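The develop-a-source-model approach can be sketched with a toy linear model: train it on a data-rich source task, use its parameters as the starting point for a related target task with very little data, and compare against training from scratch with the same small budget. The tasks (y = 3x + 1 as source, y = 3x + 2 as target), the helper names, and the step counts are all hypothetical choices made to keep the example small.

```python
from typing import List, Tuple

Params = Tuple[float, float]
Data = List[Tuple[float, float]]


def mse(params: Params, data: Data) -> float:
    """Mean squared error of the line y = w * x + b on the data."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_descent(data: Data, params: Params = (0.0, 0.0),
                     steps: int = 100, lr: float = 0.05) -> Params:
    """Full-batch gradient descent on the MSE, starting from `params`."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Source task: plenty of synthetic data from y = 3x + 1.
source = [(i / 10, 3 * (i / 10) + 1) for i in range(-20, 21)]
# Target task: related but different (y = 3x + 2), with only three examples.
target = [(-1.0, -1.0), (0.0, 2.0), (1.0, 5.0)]

source_params = gradient_descent(source, steps=2000)
# Transfer: initialize the target model with the source parameters, then fine-tune briefly.
fine_tuned = gradient_descent(target, params=source_params, steps=20)
# Baseline: the same small training budget, starting from zero.
scratch = gradient_descent(target, steps=20)

print("fine-tuned target loss:", mse(fine_tuned, target))
print("from-scratch target loss:", mse(scratch, target))
```

With the same 20 fine-tuning steps, the model initialized from the source parameters ends with a much lower target loss, because it only has to correct the intercept rather than learn the slope as well.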

Key Questions in Transfer Learning

💡 There are three questions we have to answer before applying transfer learning.

📍 What to transfer: This question concerns which part of the knowledge from the source task is carried over to the target task. Some knowledge may be specific to a single domain, while other knowledge may be common to several domains. We try to identify which knowledge of the source task can be transferred or reused to boost performance on the target task.

📍 When to transfer: The answer to this question directly determines the quality of the transfer we end up with. When the source and target domains or tasks are unrelated, transfer should not be forced, as it can yield poor results. Applying transfer learning without understanding the domains and tasks can hurt the model's performance; this is known as negative transfer.

📍 How to transfer: Once we know what and when to transfer, we have to identify ways of actually transferring the knowledge. This may include adapting the algorithms to fit the target domain and then tuning them for better performance.
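Negative transfer can be checked empirically by comparing a transferred model against a from-scratch baseline trained with the same budget. The sketch below does this for a toy linear model; the source parameters are hypothetical values standing in for models trained on a related task (y = 3x + 1) and an unrelated one (y = -3x + 10), and `check_transfer` is an illustrative helper name. In practice you would compare on held-out validation data; here the three target examples double as the evaluation set to keep it short.

```python
from typing import List, Tuple

Params = Tuple[float, float]
Data = List[Tuple[float, float]]


def mse(params: Params, data: Data) -> float:
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data: Data, params: Params, steps: int = 20, lr: float = 0.05) -> Params:
    """Full-batch gradient descent on y = w * x + b, starting from `params`."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def check_transfer(source_params: Params, target: Data, steps: int = 20) -> Tuple[float, float, bool]:
    """Return (loss with transfer, loss from scratch, whether the transfer was negative)."""
    transferred = mse(train(target, source_params, steps), target)
    scratch = mse(train(target, (0.0, 0.0), steps), target)
    return transferred, scratch, transferred > scratch


# Target task: y = 3x + 2, with only three examples.
target = [(-1.0, -1.0), (0.0, 2.0), (1.0, 5.0)]
# HYPOTHETICAL parameters "learned" on two different source tasks.
related_source = (3.0, 1.0)      # related task: y = 3x + 1
unrelated_source = (-3.0, 10.0)  # unrelated task: y = -3x + 10
print("related:", check_transfer(related_source, target))
print("unrelated:", check_transfer(unrelated_source, target))
```

Starting from the related source beats starting from scratch, while starting from the unrelated source ends up worse than the baseline: a small-scale version of negative transfer.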

Strategies for Transfer Learning

📍 Inductive Transfer Learning- The source and target tasks are different in this type of transfer learning, while the source and target domains may be the same 🔁 or different. Some labeled data in the target domain is required, and the knowledge from the source acts as an inductive bias that improves the target task. Based on whether labeled data is available in the source domain, this learning can be divided into two categories: one similar to multi-task learning (labeled source data) and one similar to self-taught learning (no labeled source data).

📍 Unsupervised Transfer Learning- This is similar to inductive transfer learning: the source and target tasks are different but related. However, the data is unlabeled in both the source and target domains, and the target task is itself an unsupervised task, such as clustering or dimensionality reduction.

📍 Transductive Transfer Learning- The source and target tasks are the same in this setting, but their domains are different. No labeled data is available in the target domain, while plenty of labeled data is available in the source domain. Transductive learning can be further divided into two cases: either the feature spaces of the source and target domains are different, or the feature spaces are the same but the marginal probability distributions of the data differ.
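The three settings can be summarized as a small decision function. This is a simplified, hypothetical encoding of the descriptions above (the function name and its boolean inputs are illustrative), and real problems do not always fit neatly into one category.

```python
def transfer_setting(same_domain: bool, same_task: bool,
                     source_labeled: bool, target_labeled: bool) -> str:
    """Map a source/target scenario onto the three settings described above (simplified)."""
    if same_domain and same_task:
        return "traditional machine learning (no transfer needed)"
    if not same_task:
        if target_labeled:
            # Labeled target data exists; source knowledge acts as an inductive bias.
            if source_labeled:
                return "inductive (similar to multi-task learning)"
            return "inductive (similar to self-taught learning)"
        if not source_labeled:
            # Different but related tasks, no labels anywhere.
            return "unsupervised"
        return "not covered by these three settings"
    # Same task, different domains.
    if source_labeled and not target_labeled:
        return "transductive"
    return "not covered by these three settings"


# Labeled movie reviews -> unlabeled product reviews, same sentiment task.
print(transfer_setting(same_domain=False, same_task=True,
                       source_labeled=True, target_labeled=False))
```

For example, reusing a sentiment classifier trained on labeled movie reviews for unlabeled product reviews (same task, different domain) falls into the transductive setting.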

Benefits of Transfer Learning

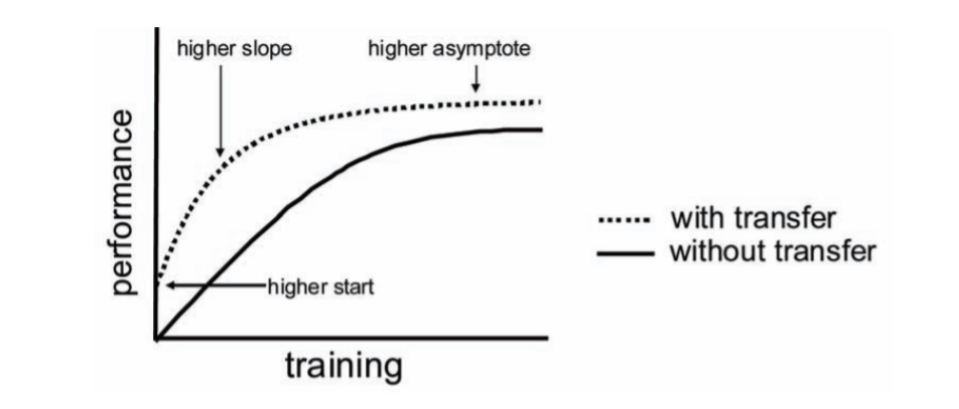

✔️ Higher Start: Because the base model is already trained, the target model starts with better performance, before any refinement on the target task.

✔️ Higher Slope: Performance on the target task improves faster during training (a steeper learning curve), because the transferred knowledge helps the model find patterns or solve problems, whichever the use case, more efficiently.

✔️ Higher Asymptote: Thanks to the combined capabilities of both models, the final, converged performance is better than it would be without transfer.
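The three benefits describe how a learning curve with transfer compares with one without it. The sketch below turns them into numbers; the function name, the `window` parameter, and the way slope and asymptote are estimated (average gain over the first few epochs, average of the last few points) are assumptions made for illustration, and the curves in the usage example are invented.

```python
from typing import Dict, List


def transfer_gains(with_transfer: List[float], without_transfer: List[float],
                   window: int = 3) -> Dict[str, float]:
    """Compare two learning curves (performance per epoch, higher is better)."""
    if len(with_transfer) != len(without_transfer) or len(with_transfer) <= window:
        raise ValueError("curves must have the same length, longer than `window`")

    def slope(curve: List[float]) -> float:
        # Average improvement per epoch over the first `window` epochs.
        return (curve[window] - curve[0]) / window

    def asymptote(curve: List[float]) -> float:
        # Approximate the plateau by averaging the last `window` points.
        return sum(curve[-window:]) / window

    return {
        "start_gain": with_transfer[0] - without_transfer[0],
        "slope_gain": slope(with_transfer) - slope(without_transfer),
        "asymptote_gain": asymptote(with_transfer) - asymptote(without_transfer),
    }


# HYPOTHETICAL accuracy curves, invented only to exercise the function.
with_tl = [0.50, 0.65, 0.75, 0.82, 0.86, 0.88, 0.89, 0.89]
without_tl = [0.20, 0.28, 0.35, 0.41, 0.46, 0.50, 0.52, 0.53]
print(transfer_gains(with_tl, without_tl))
```

A positive value for each key corresponds to a higher start, a higher slope, and a higher asymptote respectively; in a real experiment a transferred model may show some of these gains but not others.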

💡 Transfer methods tend to be highly dependent on the machine learning algorithms being used to learn the tasks and can often be considered extensions of those algorithms. Some work in transfer learning is in the context of inductive learning and involves extending well-known classification and inference algorithms such as Neural Networks, Bayesian Networks, and Markov Logic Networks. Another central area is reinforcement learning, which involves extending algorithms such as Q-Learning and Policy Search.

I hope you now have a clear idea of what transfer learning is 😊. Check out our 👉YouTube channel for more such content.